Is AI Infrastructure Overbuilt? An Operator's View on the $100B Gamble

- Tony Grayson

- Nov 25, 2025


Updated: Dec 22, 2025

By Tony Grayson, Tech Executive (ex-SVP Oracle, AWS, Meta) & Former Nuclear Submarine Commander

Published: November 25, 2025 | Last Updated: December 22, 2025

TL;DR — Key Takeaways

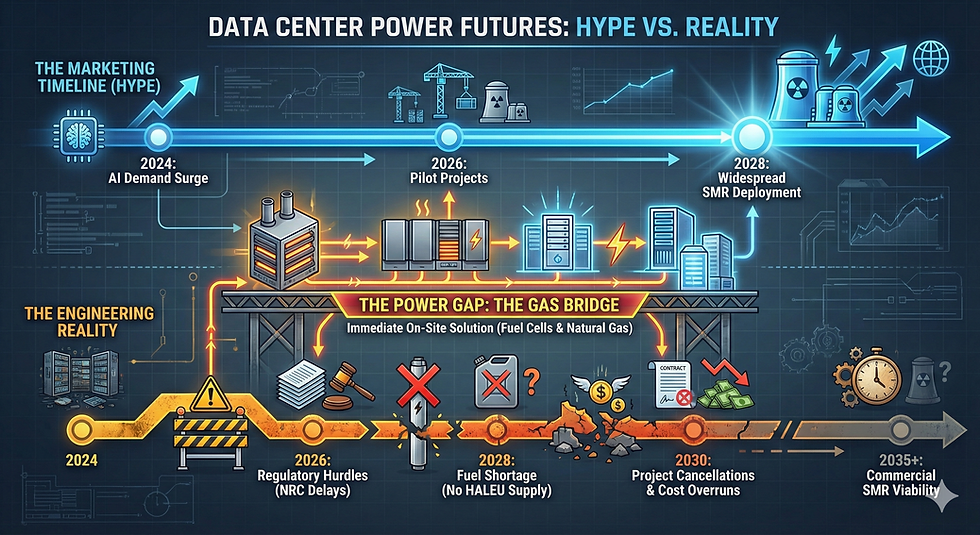

The Kemper Trap is real. When financial ambition outpaces engineering reality, you get $7.5B failures. Today's gigawatt-scale AI roadmaps assume breakthrough cooling that doesn't exist.

9 of 10 megaprojects fail. The Iron Law: over budget, over time, under benefits, over and over again. AI infrastructure is no exception.

Three tests for viable projects: Does thermodynamics support it? Do the unit economics work? Is it built for worst-case, not demo day?

Modular beats monolithic. Deploy in weeks near available power instead of betting billions on grid infrastructure that may never arrive.

I’ve been called a pessimist lately.

I look at the current surge of AI infrastructure investment (specifically the recent wave of $100 billion data center announcements), and I don’t just see progress; I see grid congestion and AI infrastructure being overbuilt.

I look at the financing models, and I see 1999 all over again. I look at the "infinite scale" roadmaps, and I see physics getting ready to snap back.

If you’re reading this and thinking I’m anti-AI, you’re missing the point.

I’m not a pessimist. I’m an operator.

I spent my early career inside a steel tube with a nuclear reactor behind my bunk. As a former U.S. Navy Submarine Commander, I learned that "optimism" gets people killed. You don’t survive by hoping the math works out. You survive by being ruthlessly honest about what the machine can actually do versus what you want it to do.

That didn’t make me cautious. It allowed us to push reactors to their absolute limit in hostile environments because we knew exactly where the edge was.

I'm asking whether AI infrastructure is overbuilt, and the physics says yes.

The Kemper Trap: Why Physics Limits AI Scaling

There’s a difference between ambition and delusion. One gets you the Apollo Program. The other gets you The Kemper Project.

What is the Kemper Trap? The Kemper Project was a $7.5 billion "clean coal" mega-project that collapsed because executives ignored the engineers. Leadership pushed a chemical process that worked in the lab but became unstable at commercial pressure. They ignored the thermodynamics to save the timeline, and seven years later, the plant was demolished: a monument to wishful thinking.

Right now, too many AI infrastructure strategies are Kemper Projects in waiting. They assume that if you pile enough cash into a server farm, the laws of physics will politely step aside.

They won't.

3 Tests for Whether Your AI Infrastructure Is Overbuilt

When I push back on these narratives, I’m not telling you to think smaller. I’m telling you to think sharper.

When I evaluate a project, whether it's at Northstar, or back in my days at Oracle and AWS, I look for three specific viability signals:

1. Thermodynamics: Do they respect the heat?

If a roadmap assumes a miracle in liquid cooling or transmission efficiency by Q3, it’s not a plan. It’s a gamble. Thermodynamics is the only law you can't lobby against.

2. Unit Economics: Does the revenue map to the power?

If it costs you $1.00 in compute and power to generate $0.80 of revenue, scale is not your friend. You aren't growing a business; you're just magnifying your losses. As I’ve written on leadership under pressure, valid economic models must survive the "bad days," not just the hype cycle.
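A minimal sketch of that arithmetic, using only the $1.00 cost and $0.80 revenue figures above. The function name and the spend levels are hypothetical, chosen purely for illustration:

```python
def net_result(spend_usd: float, revenue_per_dollar: float = 0.80) -> float:
    """Profit (negative means loss) when each $1.00 spent returns `revenue_per_dollar`."""
    return spend_usd * revenue_per_dollar - spend_usd


# Illustrative spend levels (assumptions, not figures from any real project).
for spend in (1_000_000, 100_000_000, 10_000_000_000):
    print(f"spend ${spend:>14,.0f} -> net ${net_result(spend):>16,.0f}")
```

The loss per dollar never changes; only the total grows. Scale helps only if it moves the ratio itself above 1.0.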

3. Resilience: What is the failure mode?

"Happy path" engineering is easy. I want to know what happens when the grid fluctuates or the supply chain breaks. Real infrastructure resilience is built for the worst-case scenario, not the demo day.

Conclusion: Building Real Things

I’ve heard "that’s impossible" my whole career.

I heard it when we talked about running nuclear reactors quietly enough to avoid detection by sonar. I heard it when we started deploying modular data centers in days instead of months.

But there is a difference between people who say "that's impossible" because they lack imagination, and engineers who say "that won't work" because they've done the math.

Ignore the first group. Listen to the second.

We need more moonshots. We need massive clusters. We need to guide AI infrastructure investment toward projects that can actually survive contact with reality. But we need to do it on solid ground, not on a bubble of cheap money and bad math.

Dream without limits. Build with discipline.

That isn't pessimism. That's the only way we actually get to the future.

We need massive clusters. But we need to ask hard questions about whether AI infrastructure is overbuilt before we pour concrete on bad math.

Frequently Asked Questions (FAQ)

Who is Tony Grayson?

Tony Grayson is President & General Manager of Northstar Enterprise + Defense, former Commanding Officer of USS Providence (SSN-719), and recipient of the Vice Admiral James Bond Stockdale Award. He previously led global infrastructure as SVP at Oracle, AWS, and Meta. He is an expert in data center thermodynamics and megaproject risk management.

Is AI infrastructure overbuilt?

Many current AI infrastructure projects show signs of overbuilding. The $100B+ data center announcements assume infinite scaling, cheap power, and breakthrough cooling technologies that don't yet exist at commercial scale. Constellation Energy's CEO warned in 2025 that utility demand projections in just three markets—PJM, MISO, and ERCOT—exceed credible projections for the entire country. Vistra's CEO noted demand could be overstated by 3-5x in some jurisdictions. When financial ambition outpaces engineering reality, you get stranded assets—the same failure mode that killed the $7.5B Kemper Project.

What is the Kemper Trap in AI?

The Kemper Trap is a term I use to describe infrastructure projects that fail because financial ambition outpaces engineering reality. In AI, this refers to gigawatt-scale data center roadmaps relying on power and cooling technologies that don't yet exist at commercial scale. The term derives from the Kemper Project—a $7.5 billion "clean coal" facility in Mississippi that was demolished in October 2021 after its coal gasification technology, which worked in the lab, proved unstable at commercial pressure. Today's AI infrastructure projects risk the same fate when roadmaps assume miracle breakthroughs in liquid cooling or transmission efficiency by Q3.

Why do megaprojects fail?

According to Oxford professor Bent Flyvbjerg, nine out of ten megaprojects go over budget. Rail projects average 44.7% cost overrun with demand overestimated by 51.4%. McKinsey's study of 48 troubled megaprojects found poor execution responsible for cost and time overruns in 73% of cases. Megaprojects fail when executives ignore engineers and prioritize timelines over physics. The Kemper Project collapsed because leadership pushed a chemical process that worked in the lab but became unstable at commercial pressure—they ignored thermodynamics to save the timeline.

What are the risks of AI infrastructure investment?

The three primary risks are: (1) Thermodynamics—assuming miracle breakthroughs in cooling or transmission efficiency that don't exist; thermodynamics is the only law you can't lobby against. (2) Unit economics—spending $1.00 in compute/power to generate $0.80 in revenue, which magnifies losses at scale rather than growing a business. (3) Resilience—building only for the "happy path" without planning for grid fluctuations, supply chain failures, or equipment shortages. Real infrastructure resilience is built for worst-case scenarios, not demo day presentations. For more on leadership under pressure, see Systems Leadership: The Six-Factor Formula.

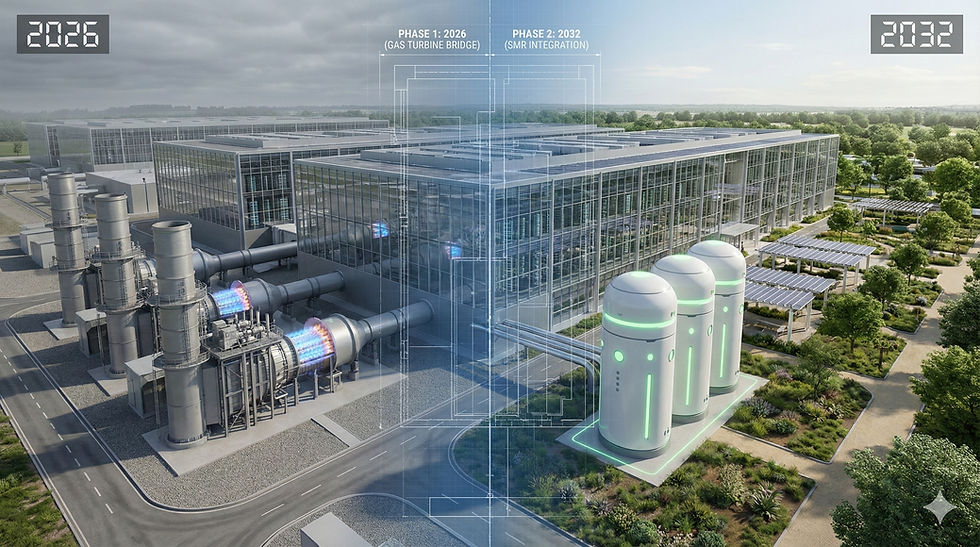

Why are modular data centers necessary for AI?

Unlike massive centralized facilities requiring years of grid upgrades, modular data centers deploy in smaller increments closer to available power sources (like behind-the-meter generation). This reduces transmission strain and shortens AI deployment timelines from 18-24 months to weeks. Modular facilities can also be deployed near "stranded gas"—natural gas that isn't currently being used—because building the power plant is actually cheaper than the servers going into the facility. This flexibility avoids the Kemper Trap of betting billions on infrastructure that may never come online.

How does military command experience apply to data centers?

Both nuclear submarines and modern data centers are high-stakes, mission-critical environments where thermodynamics, power, and redundancy are life-or-death variables. An "Operator" mindset—derived from military command—prioritizes failure mitigation and physical reality over optimistic financial forecasting. In a submarine, "optimism" gets people killed; you don't survive by hoping the math works out. This same ruthless honesty about what the machine can actually do versus what you want it to do separates viable AI infrastructure from Kemper Projects in waiting.

What was the Kemper Project?

The Kemper Project was a $7.5 billion "clean coal" power plant in Kemper County, Mississippi, built by Southern Company's Mississippi Power subsidiary. Construction began in 2010 with an original budget of $2.4 billion and May 2014 completion target. The plant used Transport Integrated Gasification (TRIG) technology to convert lignite coal into synthetic gas while capturing 65% of CO2 emissions. After chronic delays, cost overruns, and technical failures—including coal dust suppression issues, tube leaks, and undersized nitrogen systems—the coal gasification was suspended in June 2017. The gasification structures were demolished in October 2021. The facility now operates as a natural gas plant—something that could have been built for under $1.5 billion.
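To put the Kemper figures above on the same scale as the overrun percentages quoted elsewhere in this FAQ, here is a small sketch of the overrun calculation. It uses only the $2.4 billion original budget and $7.5 billion final cost stated above; the function name is a hypothetical helper:

```python
def cost_overrun_pct(original_budget: float, final_cost: float) -> float:
    """Cost overrun as a percentage of the original budget."""
    return (final_cost - original_budget) / original_budget * 100


# Figures in billions of dollars, taken from the paragraph above.
kemper_overrun = cost_overrun_pct(original_budget=2.4, final_cost=7.5)
print(f"Kemper cost overrun: {kemper_overrun:.1f}%")
```

By this measure the project finished a little over 200% above its original budget.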

What is the Iron Law of Megaprojects?

The Iron Law of Megaprojects, coined by Oxford professor Bent Flyvbjerg, states: "Over budget, over time, under benefits, over and over again." His research shows nine out of ten megaprojects have cost overruns, with overruns of 50%+ being common—the Sydney Opera House ran 1,400% over budget, Denver International Airport 200%, Boston's Big Dig 220%. Large-scale IT projects are even riskier: one in six becomes a statistical outlier with average overruns of 200% for outliers.

How much power do AI data centers consume?

A typical AI data center uses as much electricity as 100,000 households, and the largest under development will consume 20 times more, according to the International Energy Agency. AI-driven deployments are pushing sustained rack densities to 44-150 kW, up from the historical 6-16 kW range that persisted from the late 1980s through the early 2020s. Hyperscale facilities now range from 40 to 60 kW per rack, while AI data centers exceed 200 kW. This represents a structural break that fundamentally strains conventional mechanical and electrical systems, making the Kemper Trap particularly relevant for projects assuming breakthrough cooling technologies.
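A rough sketch of why those rack densities strain conventional cooling, using the steady-state heat balance Q = m_dot * c_p * delta_T. The coolant temperature rises (10 K for water, 15 K for air) and the air density are assumptions picked for illustration, not design values from any specific facility, and the sketch treats all rack power as heat to be removed:

```python
WATER_CP = 4186.0    # J/(kg*K), approximate specific heat of liquid water
AIR_CP = 1005.0      # J/(kg*K), approximate specific heat of air
AIR_DENSITY = 1.2    # kg/m^3, assumed (roughly room temperature at sea level)


def mass_flow_kg_s(heat_w: float, cp: float, delta_t_k: float) -> float:
    """Coolant mass flow needed to carry away `heat_w` watts at a given temperature rise."""
    return heat_w / (cp * delta_t_k)


# Rack densities quoted above: legacy upper bound, then the AI range.
for rack_kw in (16, 44, 150):
    heat_w = rack_kw * 1000.0
    water_kg_s = mass_flow_kg_s(heat_w, WATER_CP, delta_t_k=10.0)  # assumed 10 K rise
    air_m3_s = mass_flow_kg_s(heat_w, AIR_CP, delta_t_k=15.0) / AIR_DENSITY  # assumed 15 K rise
    print(f"{rack_kw:>4} kW rack: water {water_kg_s:5.2f} kg/s, air {air_m3_s:5.2f} m^3/s")
```

Required flow scales linearly with heat load, so a rack that draws roughly ten times the power needs roughly ten times the coolant, with no discount for ambition.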

What are stranded assets in AI infrastructure?

Stranded assets are infrastructure investments that become worthless before recovering their costs. In AI infrastructure, this means transmission lines, power plants, and gas pipelines built to meet data center demand that never materializes. IEEFA warns that if utilities build infrastructure for inflated demand projections, stranded costs will be passed to existing electricity consumers. A 2025 watchdog report found consumers on the largest U.S. electric grid will pay $16.6 billion just to secure future power supplies for data centers from 2025-2027. AEP Ohio now requires data centers to pay for 85% of claimed energy needs even if they use less, specifically to mitigate stranded asset risk.
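The 85% requirement described above can be sketched as a simple billing floor. This is a simplified model of the one rule stated in the text, not the actual tariff, which will have terms this ignores; the function name and the 100 MW example are hypothetical:

```python
def billable_mw(claimed_mw: float, actual_mw: float, min_take: float = 0.85) -> float:
    """Demand the customer pays for: actual usage, but never below the minimum-take floor."""
    return max(actual_mw, min_take * claimed_mw)


# Hypothetical developer that claimed 100 MW and then used different amounts.
for actual in (40.0, 85.0, 95.0):
    print(f"claimed 100 MW, used {actual:5.1f} MW -> billed {billable_mw(100.0, actual):5.1f} MW")
```

Under a floor like this, the cost of an inflated claim lands on the developer who made it rather than on other ratepayers.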

Why are utilities concerned about AI data center demand?

Utility executives are raising alarms about inflated demand projections. Constellation Energy CEO Joe Dominguez warned investors: "It's hard not to conclude that the headlines are inflated." Vistra Energy CEO James Burke noted demand could be overstated 3-5x in some jurisdictions because developers shop the same projects to multiple utilities seeking the fastest power access. GridUnity's CEO reported that 30% of proposals received by one large utility client were canceled in 2024. Grid Strategies found that, nationally, utilities are planning for about 50% more data center demand than the tech industry projects.
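The double-counting mechanism described above can be illustrated with a toy example. Every project name, utility name, and megawatt figure below is made up for illustration; the point is only that summing requests per utility counts the same project several times:

```python
# (project, utility, requested MW): hypothetical interconnection requests.
requests = [
    ("project-a", "utility-1", 300), ("project-a", "utility-2", 300),
    ("project-a", "utility-3", 300),
    ("project-b", "utility-1", 200), ("project-b", "utility-2", 200),
    ("project-b", "utility-3", 200), ("project-b", "utility-4", 200),
    ("project-c", "utility-2", 100),
]


def apparent_vs_real(reqs):
    """Return (sum of all requests, sum counting each project only once)."""
    apparent = sum(mw for _, _, mw in reqs)
    per_project = {}
    for project, _, mw in reqs:
        # A project can only be built once, so keep its largest single request.
        per_project[project] = max(per_project.get(project, 0), mw)
    return apparent, sum(per_project.values())


apparent, real = apparent_vs_real(requests)
print(f"apparent demand: {apparent} MW, deduplicated: {real} MW, ratio: {apparent / real:.1f}x")
```

No single utility can see the duplicates in its own queue, which is why the inflation only shows up when the requests are compared across utilities.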

What is behind-the-meter power generation for data centers?

Behind-the-meter power generation means building power sources (natural gas turbines, solar, batteries, or eventually small modular reactors) directly at the data center site rather than drawing from the utility grid. This approach bypasses years-long grid interconnection queues and transmission constraints. Developers are increasingly siting data centers near "stranded gas" (unused natural gas) because building the power plant on-site is cheaper than waiting for grid upgrades. Behind-the-meter generation is essential for avoiding the Kemper Trap: it allows incremental, physics-respecting deployment rather than betting billions on grid infrastructure that may never arrive. For more on gas-to-nuclear bridge strategies, see The SMR Market Correction.

____________________________________

Tony Grayson is a recognized Top 10 Data Center Influencer, a successful entrepreneur, and the President & General Manager of Northstar Enterprise + Defense.

A former U.S. Navy Submarine Commander and recipient of the prestigious VADM Stockdale Award, Tony is a leading authority on the convergence of nuclear energy, AI infrastructure, and national defense. His career is defined by building at scale: he led global infrastructure strategy as a Senior Vice President for AWS, Meta, and Oracle before founding and selling a top-10 modular data center company.

Today, he leads strategy and execution for critical defense programs and AI infrastructure, building AI factories and cloud regions that survive contact with reality.

Read more at: tonygraysonvet.com
